wikiHow is a “wiki,” similar to Wikipedia, which means that many of our articles are co-written by multiple authors. To create this article, volunteer authors worked to edit and improve it over time.


The sensitivity of a test is the percentage of individuals with a particular disease or characteristic who are correctly identified as positive by the test. Tests with high sensitivity are useful as screening tests to exclude the presence of a disease. Sensitivity is an intrinsic test parameter independent of disease prevalence; the precision of an estimated sensitivity, however, depends on the sample size. Estimates based on small samples (e.g., 20 to 30 samples) have wider confidence intervals, signifying greater imprecision. The 95% confidence interval for a test's sensitivity is an important measure in the validation of a test for quality assurance. To determine the 95% confidence interval, follow these steps.
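The effect of sample size on precision can be illustrated with a short Python sketch. It assumes the normal-approximation standard error that the steps in this article use; the function name `wald_half_width` and the sample sizes in the loop are hypothetical choices made only for illustration.

```python
import math

def wald_half_width(sensitivity, n_diseased, z=1.96):
    """Half-width of the normal-approximation interval for a proportion."""
    standard_error = math.sqrt(sensitivity * (1 - sensitivity) / n_diseased)
    return z * standard_error

# Same observed sensitivity (0.95), increasingly large groups of diseased individuals.
for n in (20, 30, 100, 1000):
    print(f"n = {n:4d}: 0.95 +/- {wald_half_width(0.95, n):.3f}")
```

The printed margin shrinks as n grows, which is the sense in which small validation samples give less precise sensitivity estimates.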

Steps

1. Determine the test's sensitivity. This is generally reported for a specific test as one of its intrinsic characteristics. It is equal to the proportion of positive test results among all tested persons who have the disease or characteristic of interest. For this example, suppose the test has a sensitivity of 95%, or 0.95.

2. Subtract the sensitivity from 1. For our example, we have 1 - 0.95 = 0.05.

-

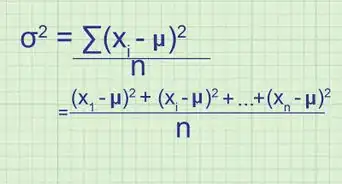

3Multiply the result above by the sensitivity.[1] For our example, we have 0.05 x 0.95 = 0.0475.

-

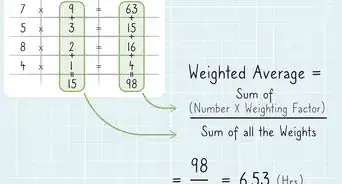

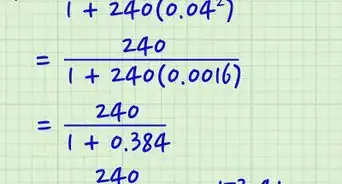

4Divide the result above by the number of positive cases.[2] Suppose 30 positive cases were in the data set. For our example, we have 0.0475/30 = 0.001583.

-

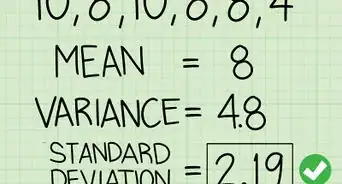

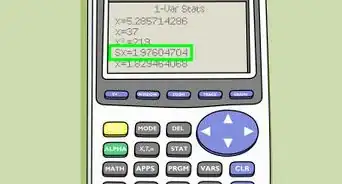

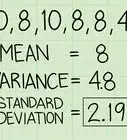

5Take the square root of the result above.[3] In our example, it would be sqrt(0.001583) = 0.03979, or approximately 0.04 or 4%. This is the standard error of the sensitivity.

6. Multiply the standard error obtained above by 1.96.[4] For our example, we have 0.04 x 1.96 = 0.0784, or approximately 0.08. (Note that 1.96 is the standard normal critical value for a 95% confidence interval, found in statistical tables. The corresponding value for a more stringent 99% confidence interval is 2.58, and for a less stringent 90% confidence interval it is 1.645.)

-

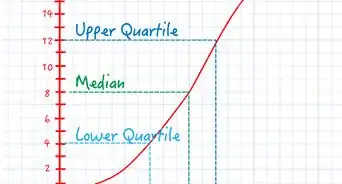

7The sensitivity plus or minus the result obtained above establishes the 95% confidence interval. In this example, the confidence interval ranges from 0.95-0.08 to 0.95+0.08, or 0.87 to 1.03.
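The seven steps can be sketched in Python as follows. This is a minimal illustration rather than a definitive implementation: the function name `sensitivity_confidence_interval` and its parameter names are hypothetical, and clipping the limits to the range 0 to 1 is an added assumption, made because a proportion cannot fall outside that range.

```python
import math

def sensitivity_confidence_interval(sensitivity, n_diseased, z=1.96):
    """Normal-approximation interval for a sensitivity estimate.

    sensitivity: observed sensitivity as a proportion between 0 and 1
    n_diseased:  number of tested individuals who truly have the condition
    z:           normal critical value (1.96 for 95% confidence)
    """
    if not 0 <= sensitivity <= 1:
        raise ValueError("sensitivity must be between 0 and 1")
    if n_diseased <= 0:
        raise ValueError("n_diseased must be positive")

    complement = 1 - sensitivity              # Step 2
    product = complement * sensitivity        # Step 3
    variance = product / n_diseased           # Step 4
    standard_error = math.sqrt(variance)      # Step 5
    margin = z * standard_error               # Step 6

    # Step 7, with both limits clipped to the valid range [0, 1] (assumption)
    lower = max(0.0, sensitivity - margin)
    upper = min(1.0, sensitivity + margin)
    return lower, upper, standard_error

lower, upper, se = sensitivity_confidence_interval(0.95, 30)
print(f"standard error = {se:.4f}")
print(f"95% CI = {lower:.2f} to {upper:.2f}")
```

Passing a different `z` (for example 2.58) gives the interval for another confidence level without changing the rest of the calculation.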

Community Q&A

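Question: What method is used here to calculate confidence intervals?

Community Answer: This is the normal approximation for a proportion. Work out the standard error of your estimate; the confidence interval is then the estimate plus and minus 1.96 x the standard error. The approximation works reasonably well when the sample is large enough for the estimate to be roughly normally distributed.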
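As a formula, the steps in this article amount to the normal-approximation interval for a proportion. The symbols are notation introduced here for illustration: $\hat{p}$ is the observed sensitivity, $n$ is the number of tested individuals who have the disease, and $z$ is the normal critical value (1.96 for 95% confidence).

$$
\hat{p} \pm z \sqrt{\frac{\hat{p}\,(1 - \hat{p})}{n}}
$$

With the article's example values, $\hat{p} = 0.95$, $n = 30$, and $z = 1.96$, this gives $0.95 \pm 1.96 \times 0.0398 \approx 0.95 \pm 0.08$.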
